Logistic Regression

Logistic Regression with a Neural Network mindset.


Logistic Regression is a statistical method used for binary classification. Despite the word "regression" in its name, it is used to predict the probability that an instance belongs to a particular class. It models the log odds of the event occurring as a linear function of the input features, where the event is typically represented by a binary outcome (0 or 1).

The linear combination of the input features is then passed through the logistic function (sigmoid), which maps any real-valued number to a value between 0 and 1. The result can be interpreted as the probability that the instance belongs to the positive class.
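One way to see how the log odds and the sigmoid fit together: write $p$ for the probability of the positive class and $z$ for the linear combination of the input features (in the notation used later for forward propagation, $z = w^T x + b$). Setting the log odds equal to $z$ and solving for $p$ gives the sigmoid:

$$
\log\frac{p}{1-p} = z
\;\Longrightarrow\; \frac{p}{1-p} = e^{z}
\;\Longrightarrow\; p = \frac{e^{z}}{1+e^{z}} = \frac{1}{1+e^{-z}} = \sigma(z)
$$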

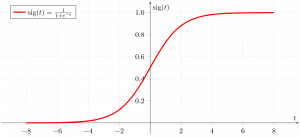

Sigmoid Function

We apply the sigmoid function $\sigma(z) = \frac{1}{1 + e^{-z}}$ to the input $z$, the linear combination of the features, to obtain a probability between 0 and 1, i.e., the predicted $\hat{y}$.

As the figure above shows, the sigmoid function maps any real-valued input to a probability between 0 and 1:

𝜎(𝑧) tends towards 1 as 𝑧→∞

𝜎(𝑧) tends towards 0 as 𝑧→−∞

𝜎(𝑧) is always bounded between 0 and 1
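A minimal NumPy sketch of the sigmoid follows. The function name `sigmoid` is our own choice, and inputs are assumed to be floats or NumPy arrays. Evaluating it on a few points lets you check the three properties above numerically.

```python
import numpy as np

def sigmoid(z):
    """Logistic function sigma(z) = 1 / (1 + exp(-z)), applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))

# Large negative z gives values near 0, large positive z gives values near 1.
z = np.array([-10.0, -1.0, 0.0, 1.0, 10.0])
print(sigmoid(z))
```

At $z = 0$ the sigmoid returns exactly 0.5, the midpoint between the two classes.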

The probability of each class is then:

𝑃(𝑦=1)=𝜎(𝑧)

𝑃(𝑦=0)=1−𝜎(𝑧)
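The sketch below computes both class probabilities for a single example. The weights `w`, bias `b`, and feature vector `x` are hypothetical values chosen only for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical parameters and one example with two features (illustrative values only).
w = np.array([0.8, -0.4])
b = 0.1
x = np.array([2.0, 1.0])

z = np.dot(w, x) + b   # linear score z = w^T x + b
p1 = sigmoid(z)        # P(y = 1)
p0 = 1.0 - p1          # P(y = 0)
print(f"z = {z:.2f}, P(y=1) = {p1:.3f}, P(y=0) = {p0:.3f}")
```

Because $z > 0$ here, $P(y=1)$ exceeds 0.5. The two probabilities always sum to 1.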

Cost Function

A cost function measures the difference between the actual target values (ground truth) and the values predicted by the model. It is also referred to as a loss function or objective function, and it assesses how well a machine learning model performs. Usually, the objective of a machine learning algorithm is to minimize the output of the cost function.

For linear regression, plotting the mean squared error against the model's weight parameters produces a convex (bowl-shaped) surface. Convexity matters because a convex function has no local minima other than the global minimum. The Gradient Descent Algorithm repeatedly adjusts the model's weights in the direction that lowers the error, so with a suitable learning rate it can reach that global minimum. In essence, it is a way of optimizing the model to make its predictions more accurate. For logistic regression, applying squared error to the sigmoid output gives a non-convex cost. For that reason logistic regression uses log loss instead, which is convex in the weights and bias.
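The sketch below illustrates why convexity matters. It runs gradient descent on a toy one-parameter convex cost, $J(w) = (w - 3)^2$, chosen purely for illustration; this is not the logistic regression cost. From every starting point, repeated steps against the gradient reach the single global minimum at $w = 3$. The learning rate and step count are assumed values.

```python
def gradient_descent(grad, w0, learning_rate=0.1, num_steps=200):
    """Repeatedly step against the gradient: w <- w - learning_rate * grad(w)."""
    w = w0
    for _ in range(num_steps):
        w = w - learning_rate * grad(w)
    return w

# Toy convex cost J(w) = (w - 3)^2, whose derivative is dJ/dw = 2 * (w - 3).
def toy_grad(w):
    return 2.0 * (w - 3.0)

for start in (-10.0, 0.0, 25.0):
    print(start, "->", gradient_descent(toy_grad, start))
```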

Log Loss for Logistic regression

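Log loss is a classification evaluation metric used to compare the different models we build during model development. It is well suited to evaluating the soft probabilities a model predicts. It penalizes predictions that are confident and wrong much more heavily than predictions that are only slightly off. In logistic regression, it also serves as the cost function minimized during training.

In logistic regression, the corrected probability of an example is the probability the model assigns to that example's actual class. It equals the predicted probability $\hat{y}$ when $y = 1$, and $1 - \hat{y}$ when $y = 0$. Log loss takes the natural logarithm (base e) of these corrected probabilities.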

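Hence, the Log Loss can be summarized with the following formula:

$$J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\left(y^{(i)}\log\left(\hat{y}^{(i)}\right) + \left(1-y^{(i)}\right)\log\left(1-\hat{y}^{(i)}\right)\right)$$

where,

$m$ is the number of training examples

$y^{(i)}$ is the true class label for the i-th example (either 0 or 1).

$\hat{y}^{(i)}$ is the predicted probability for the i-th example, as calculated by the logistic regression model.

$\theta$ denotes the model parameters (the weights $w$ and bias $b$).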
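Here is a small worked example with made-up numbers. Take $m = 2$ examples with labels $y^{(1)} = 1$, $y^{(2)} = 0$ and predicted probabilities $\hat{y}^{(1)} = 0.9$, $\hat{y}^{(2)} = 0.2$. For each example only one of the two terms is non-zero, and it is the log of that example's corrected probability:

$$
J = -\frac{1}{2}\left(1\cdot\log 0.9 + 0\cdot\log 0.1 \;+\; 0\cdot\log 0.2 + 1\cdot\log 0.8\right)
  = -\frac{1}{2}\left(\log 0.9 + \log 0.8\right)
  \approx -\frac{1}{2}\left(-0.1054 - 0.2231\right) \approx 0.1643
$$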

In summary:

Calculate predicted probabilities using the sigmoid function.

Apply the natural logarithm to the corrected probabilities.

Sum up and average the log values, then negate the result to get the Log Loss.
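The three steps above can be sketched in NumPy as follows. The function name `log_loss_from_scores`, its inputs (labels plus linear scores $z$), and the `eps` clipping safeguard are assumptions for this illustration. Clipping only keeps the logarithm away from an exact 0 when extreme scores round a probability to 0 or 1; it is not part of the formula.

```python
import numpy as np

def log_loss_from_scores(y_true, z, eps=1e-15):
    """Log loss computed step by step from 0/1 labels and linear scores z = w^T x + b."""
    y_true = np.asarray(y_true, dtype=float)
    z = np.asarray(z, dtype=float)
    # Step 1: predicted probabilities P(y = 1) via the sigmoid.
    y_prob = 1.0 / (1.0 + np.exp(-z))
    # Corrected probability: the probability assigned to each example's actual class.
    corrected = np.where(y_true == 1, y_prob, 1.0 - y_prob)
    # Assumed safeguard: keep corrected probabilities away from exactly 0.
    corrected = np.clip(corrected, eps, 1.0)
    # Step 2: natural logarithm of the corrected probabilities.
    log_values = np.log(corrected)
    # Step 3: average the log values, then negate.
    return -np.mean(log_values)

y = [1, 0, 1]
scores = [2.0, -1.5, 0.3]   # made-up linear scores
print(log_loss_from_scores(y, scores))
```

When every score is 0, each corrected probability is 0.5, and the loss equals $\log 2 \approx 0.693$.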

Forward and Backward propagation

Implement a function propagate() that computes the cost function and its gradient.

Forward Propagation:

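Get X.

Compute $A = \sigma(w^T X + b) = (a^{(1)}, a^{(2)}, \dots, a^{(m-1)}, a^{(m)})$

Calculate the cost function: $J = -\frac{1}{m}\sum_{i=1}^{m}\left(y^{(i)}\log\left(a^{(i)}\right) + \left(1-y^{(i)}\right)\log\left(1-a^{(i)}\right)\right)$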

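Backward Propagation:

Here are the two formulas you will be using, where $Y = (y^{(1)}, \dots, y^{(m)})$ holds the labels:

$$\frac{\partial J}{\partial w} = \frac{1}{m} X (A - Y)^T$$

$$\frac{\partial J}{\partial b} = \frac{1}{m} \sum_{i=1}^{m} \left(a^{(i)} - y^{(i)}\right)$$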
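Putting the forward and backward steps together gives the minimal NumPy sketch of `propagate()` below. The argument order `(w, b, X, Y)`, the array shapes, and the returned `(grads, cost)` pair are assumptions made for this illustration, not a fixed interface. The input values are made up.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def propagate(w, b, X, Y):
    """Cost and gradients for logistic regression (illustrative sketch).

    w: weights, shape (n, 1); b: scalar bias
    X: inputs, shape (n, m), one column per example
    Y: labels, shape (1, m), values 0 or 1
    """
    m = X.shape[1]
    # Forward propagation: A = sigma(w^T X + b), shape (1, m)
    A = sigmoid(np.dot(w.T, X) + b)
    cost = -np.sum(Y * np.log(A) + (1 - Y) * np.log(1 - A)) / m
    # Backward propagation
    dw = np.dot(X, (A - Y).T) / m   # dJ/dw = (1/m) X (A - Y)^T, shape (n, 1)
    db = np.sum(A - Y) / m          # dJ/db = (1/m) sum(a_i - y_i)
    return {"dw": dw, "db": db}, float(cost)

# Made-up example: n = 2 features, m = 3 examples.
w = np.array([[1.0], [2.0]])
b = 2.0
X = np.array([[1.0, 2.0, -1.0],
              [3.0, 4.0, -3.2]])
Y = np.array([[1, 0, 1]])
grads, cost = propagate(w, b, X, Y)
print(grads["dw"].ravel(), grads["db"], cost)
```

Vectorizing over all $m$ columns of X at once avoids an explicit Python loop over the training examples.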
